THE HISTORY OF COMPUTERS

How Did We Get Here?

Technology is certainly changing at a feverish pace. Today, we are in what is considered to be the fourth generation

of technology. Tomorrow’s technology generation will be

characterized by technologies that we grow (called membrane-based

technologies), have intelligence, are minuscule in size, and are

wireless and powerless. As you move forward with technology, it’s often helpful to

have a little historical perspective so you can better understand

how we got here today and the directions in which we may move

tomorrow. This Life-Long Learning Module will help you do just

that. You should understand that people still argue about the specific

dates on which we moved from one generation of technology to the next.

For example, when exactly did we leave the first generation of

technology and start the second generation? We’ll certainly

not debate that here — so our time periods for each generation

start and end in groupings of years.

Pre-Technology (3000 BC — mid 1940s)

It does help to understand technology from a historical

perspective. Of course, we don’t need to go all the way back

to the stone age and discuss how people used rocks for counting.

But let’s look at some early forms of technology (which are

not really technologies at all as we understand them today).

- 3000 BC — the abacus in Asia and most parts of

Europe was widely used as a device for quickly adding, subtracting,

multiplying, and dividing numbers.

- 1642 - Blaise Pascal invented a numerical wheel calculator. It had eight movable dials, so you could add sums up to eight figures long. Of course, it was completely manual and could only add numbers.

- 1694 — Gottfried Wilhelm von Leibniz invented a

machine that could also multiply. It is known as Leibniz’s

mechanical multiplier.

- 1820 — Charles Xavier Thomas de Colmar refined

Leibniz’s mechanical multiplier so that it could perform all

the four basic functions of mathematics. It is known as

Colmar’s mechanical calculator.

- 1822 — Charles Babbage began work on the Difference Engine, a mechanical machine designed to perform calculations and automatically print the results. In the 1830s, he moved on to the design of the Analytical Engine, a steam-powered design that could be programmed with punched cards. Augusta Ada King (better known as Ada Lovelace) worked with him and wrote about how the Analytical Engine could be programmed. As a side note, Ada became such an important historical figure in the development of technology that the U.S. Defense Department named a programming language after her in 1980.


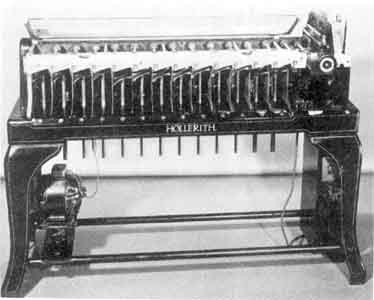

- 1889 — Herman Hollerith developed a machine capable

of reading information from a punched card. This machine was based

on the punched cards that controlled looms in textile mills. Herman was

an entrepreneur and created the Tabulating Machine Company based on

his punched card concept. After a series of mergers, the Tabulating

Machine Company became IBM in 1924.

- 1940 — John Atanasoff developed the first truly

all-electronic computer. However, John lost funding for his project

and it never fully matured. It did, though, usher in the first

generation of computing technology.

First Generation (mid 1940s — mid 1950s)

World War II greatly increased the need for technology. All

countries participating in WWII sought to create technologies to

aid in plane and missile designs, code deciphering, and missile

projections. Technology in this first generation was based on vacuum tubes

and magnetic drums for data storage. Their immense size, girth, and

weight required that entire buildings be devoted to just holding

the "computer." Some important dates and people of the first generation of

technology are listed below.

- 1941 — Konrad Zuse, a German engineer, built the Z3

computer to design missiles and airplanes.

- 1943 — the British built Colossus, a computer for

decoding German messages.

- 1944 — Howard Aiken, an American, built the Mark I

computer, an electromechanical calculator. It could perform

calculations at a rate of 3 to 5 seconds per calculation.


- ENIAC (Electronic Numerical Integrator and Computer)

— developed by the U.S. government and the University of

Pennsylvania during WWII. It was composed of 18,000 vacuum tubes

and 70,000 resistors. When turned on, it required so much

electricity that it dimmed the lights in an entire section of

Philadelphia.

- EDVAC (Electronic Discrete Variable Automatic Computer)

— developed by John von Neumann. The EDVAC is significant

because its "stored memory" (holding both information and

instructions) could be stopped at any point and then resumed.

- 1951 — Remington Rand built the UNIVAC I, the first

commercially available computer. The U.S. Census Bureau was the

first to purchase the UNIVAC I.

Second Generation (mid 1950s — mid 1960s)

In 1948, a new development changed technology and ushered in the

second generation. It was the transistor. Because of the transistor, technology became smaller (relative

to first generation technologies), faster, more reliable, and more

energy-efficient. Up through the mid 1960s, transistor-based computers

became more widespread in the business world and their applications

increased. Most notably, computers took on the important business

functions of processing financial and accounting transactions. Third-generation programming languages such as COBOL and Fortran

surfaced during this period. This new generation of languages made

it easier and faster to write software. Not the most exciting generation of technology.

Third Generation (mid 1960s — early 1970s)

The third generation of computer technologies was marked by the

invention of the integrated circuit. Once again, the new technology

was better in all ways than the one it replaced, the transistor. So, we witnessed another period of computer revolution with

smaller, better, more reliable, cheaper, and more energy-efficient

technologies. Computers were now in widespread use at all major corporations.

These computers were known as mainframe computers. Some could

perform as many as 500,000 instructions per second. However, most organizations had only one mainframe computer, and

everyone had to share it. On a daily basis (or perhaps even weekly

or monthly), transactions were "batched" as they came in and then

processed at 2AM. So, "real-time" processing was not yet a

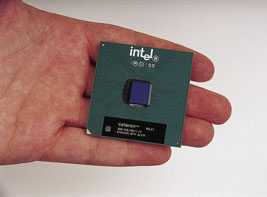

reality. Back to the top Fourth Generation (early 1970s — today)  <a onClick="window.open('/olcweb/cgi/pluginpop.cgi?it=jpg:: ::/sites/dl/free/0072464011/18618/Image11.jpg','popWin', 'width=NaN,height=NaN,resizable,scrollbars');" href="#"><img valign="absmiddle" height="16" width="16" border="0" src="/olcweb/styles/shared/linkicons/image.gif"> (215.0K)</a> <a onClick="window.open('/olcweb/cgi/pluginpop.cgi?it=jpg:: ::/sites/dl/free/0072464011/18618/Image11.jpg','popWin', 'width=NaN,height=NaN,resizable,scrollbars');" href="#"><img valign="absmiddle" height="16" width="16" border="0" src="/olcweb/styles/shared/linkicons/image.gif"> (215.0K)</a>

Here we are today (and already moving into the next

generation). The fourth generation of technology is based on VLSI, or

very-large-scale integration, and ULSI, or ultra-large-scale

integration. In the 1970s, organizations began to purchase more than

one computer. These new smaller, faster, and cheaper computers were

known as minicomputers. With this new technology basis,

organizations could now split processing capabilities (and software

and information) and locate them within various functional units.

Personal computers sitting on desktops were still not a

reality. But that changed in the late 1970s and early 1980s.

Although other companies first brought microcomputers to the

market, IBM is credited with officially ushering in the

microcomputer with its introduction of the IBM PC in 1981. The IBM

PC was capable of performing an amazing 330,000 instructions per

second, all while sitting on a desktop (something no one ever

thought would happen). Ten years later in 1991, the number of microcomputers stood at

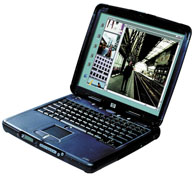

an unbelievable 65 million.  <a onClick="window.open('/olcweb/cgi/pluginpop.cgi?it=jpg:: ::/sites/dl/free/0072464011/18618/Image12.jpg','popWin', 'width=NaN,height=NaN,resizable,scrollbars');" href="#"><img valign="absmiddle" height="16" width="16" border="0" src="/olcweb/styles/shared/linkicons/image.gif"> (130.0K)</a> <a onClick="window.open('/olcweb/cgi/pluginpop.cgi?it=jpg:: ::/sites/dl/free/0072464011/18618/Image12.jpg','popWin', 'width=NaN,height=NaN,resizable,scrollbars');" href="#"><img valign="absmiddle" height="16" width="16" border="0" src="/olcweb/styles/shared/linkicons/image.gif"> (130.0K)</a>

And from there, you know most of the story. Apple battled IBM.

Both eventually lost to IBM-compatibles like Dell and Gateway.

Microsoft sprang up and captured the operating system market. It

would not be until about 1996 that Microsoft controlled the word

processing, spreadsheet, presentation, and database personal

productivity software markets. We also began to network computers together, first with local

area networks (LANs) and then with metropolitan area networks

(MANs) and wide area networks (WANs). Today, we have Web-accessing and lightweight notebooks, PDAs,

and cell phones. We can’t imagine life without them. We also witnessed in the 1990s the birth of the World Wide Web

as we know it today (the Internet had been around for many years

under government control).

The Generation to Come (today — tomorrow)

In the generation to come (which has already started),

we’ll see some of the most unbelievable and dramatic changes.

Heretofore, changes in technology have really just focused on

speed, size, and other efficiency issues. While those are all

important, tomorrow’s technology will have more far-reaching

consequences. Key topics here include:

- Intelligence

- Size

- Growing technologies

- Wireless

- Powerless

We’ll only briefly discuss these here. To learn more about the future of technology, read Life-Long Learning Module F Computers in

Your Life Tomorrow. To learn more about some really new and exciting technologies

that are surfacing even as we speak, read Life-Long Learning Module E New

Technologies Impacting Your Life.

Intelligence

The most dramatic and far-reaching change in the technology of

tomorrow is that it will have true intelligence. Right now, the key

term in "artificial intelligence" is artificial. In the future generation of technology, you’ll have

intelligent technologies that learn right along with you throughout

your life. Can you imagine getting a computer at birth that becomes your

life-long companion?

Size

Needless to say, technology will become increasingly smaller,

and — at the same time — more powerful and cheaper. Of course, decreases in size lead to more portability. And you

need to rethink your version of portability. Technology will become

so small that your wrist watch may have a CPU capable of performing

20 billion instructions per second. And you may wear some of your

technology in your clothes (we discussed this possibility in

Chapter 9).

Growing Technologies

As it stands right now, technology is based on metals and

artificial component parts. Tomorrow’s technology will be grown in the laboratory and

will be similar to human tissue. That’s right. Researchers are

working diligently on growing tissue and controlling the

information each individual cell contains. Don’t worry — this is not genetic cloning or anything

like that. Think of the immense capacity of the human brain and its

relatively small size. If we can develop technologies that are

membrane-based, size will not be an issue at all. Read more about

membrane-based technologies in Life-Long Learning Module F

Computers in Your Life Tomorrow.

Wireless

Wireless technologies are a "no brainer." We’ve all seen it

coming. What we haven’t seen is high-speed, reliable wireless

technology. That should come to pass within the next 5 years or so on a

widespread basis. Once again, when it does, we’ll have to

rethink our version of portability.

Powerless

One of the significant drawbacks to technology that most people

simply accept as "a part of life" is that technology needs power,

either directly from the wall or from a battery. Of course, all electrical devices need power. So, we simply

accept that they must have a battery or that we must plug them into

a wall outlet. In the coming years, you can expect to see dramatic changes in

the way in which we "power" our technology. In the short term,

expect to begin seeing batteries that have a longer life. That is,

we believe that within the next couple of years battery-powered

notebooks will be able to operate for more than 24 hours before

needing recharging. In the longer term, we believe that we’ll be able to

harness the electrical energy of the human body. Did you know that

the human body produces enough electrical power to support all the

electrical devices of a typical home? Of course, we’ll have to determine how to get that

electrical energy from our bodies and into a computer. But

you’ll most likely see it come to pass in your lifetime.


2002 McGraw-Hill Higher Education
